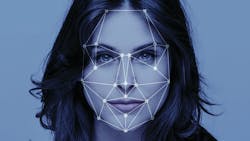

All eyes are turning toward California as lawmakers consider a statewide ban on facial recognition technology in police body cameras.

Assembly Bill 1215, known as the Body Camera Accountability Act, proposes banning facial recognition software in police body-worn cameras (BWCs). The bill is the first of its kind, and some expect it to set in motion a federal ban on the biometric. In fact, lawmakers on the U.S. House Oversight and Reform Committee have already begun a bipartisan push to prohibit its use until more legal and regulatory guidance is developed.

The bill follows San Francisco’s blanket ban on government use of facial recognition. The ordinance bars any San Francisco government entity, including the police department, from using the technology. Somerville, Massachusetts, has followed suit, and other cities are looking to do the same.

The legal movement has pushed manufacturers to reconsider their use of facial recognition. In June, Axon became the first company to ban the biometric from its body cameras. The BWC manufacturer’s independent ethics board determined “face recognition technology is not currently reliable enough to ethically justify its use on body worn cameras. At the least, face recognition technology should not be deployed until the technology performs with greater accuracy and performs equally well across races, ethnicity, genders and other identity groups.”

As a public safety strategist for CDW Government, Matt Parnofiello specializes in applied field technologies for law enforcement, and he has been watching the facial recognition debate play out. While he believes the end result will be better and more accurate technology, in the meantime any ban leaves police without a valuable tool.

Even so, Parnofiello remains optimistic. “There is a trio of factors in play here: technology, the law and social acceptance,” says Parnofiello. “Currently, they are at odds with each other. But we have plenty of examples of once controversial technologies in use today; technology that was once banned or frowned upon, that is now commonly accepted by everyone. These laws will not stand forever,” he says.

Benefits of biometric technology

Face recognition and other means of biometrics benefit law enforcement by enabling officers to move from reactive to proactive policing.

Parnofiello uses the following theoretical example to explain possible biometric uses and to illustrate where public fear stems from. In theory, he says, an officer could walk down the street with his body camera on; though the camera is not set to record, it could still view and process the scene, just like the officer. The difference is that the body camera is linked to criminal justice information databases. If a wanted subject or person of interest walks by, the camera could alert the officer, provide a picture, and give additional details like name, address, outstanding warrants, criminal affiliations and suspected crimes. That situational intelligence could prompt the officer to act, solve a crime or prevent one. “That’s the theoretical example [it’s not happening today],” Parnofiello states. “But there are actual examples of law enforcement’s use of face recognition.”

In April, a California law enforcement officer saw a National Center for Missing and Exploited Children Facebook post containing a picture of a missing child. The officer took a screenshot of the image and fed it into a tool created by nonprofit Thorn. The tool, called Spotlight, uses text and image processing algorithms to match faces and other clues in online sex ads with other evidence.

The search quickly returned a list of online ads featuring the girl’s photo. It set an investigation in motion, and police found the victim within weeks. “This is something that could not have happened without facial recognition technology,” Parnofiello states. “This is a perfect example of what officers will not be able to do if they cannot use the technology.”

U.S. Customs and Border Protection (CBP) also relies on the technology at the border and at some international airports. The tool compares a person’s likeness, captured as they walk by, to a passport or wanted-person photo on file. If the system detects an impostor, it alerts authorities, who then screen the individual more thoroughly.

Facial recognition systems also help police secure schools, stadiums and other public places. If officers receive information about a specific threat to a site, they can use face recognition to scan the crowd for the person in question, and the system automatically alerts them when that individual arrives. Inside police headquarters, face recognition improves department security by controlling officers’ access to the building and department computers.

“We are at the tip of the iceberg in terms of what face recognition and other biometrics can do,” Parnofiello stresses.

Surveillance technology capabilities are growing at lightning speed. Philadelphia-based ZeroEyes, for instance, markets a product that uses artificial intelligence to actively monitor surveillance camera feeds and detect individuals carrying a gun or other weapon. The software integrates with existing surveillance camera systems and is already installed in several U.S. schools. CBP is also considering the tool.

“If you can detect a face, car, all these different objects through cameras, we can detect guns and send alerts to decrease response times for first responders and mitigate the threat from active shooters,” said ZeroEyes CEO and former Navy SEAL Mike Lahiff in a press release.

“We call it object detection, and it deals with threats to safety, like people falling down, pulling out a knife or a gun, someone who appears stressed out, is sweating or twitching; people twitch a lot when they are about to do something,” says Chris Ciabarra, chief technology officer at Athena Security.

Smart cities provide an excellent example of where the technology is heading. Cities are installing surveillance cameras in strategic areas within their downtowns and connecting them to law enforcement real-time crime information centers and fusion centers. Though these centers do not currently use face recognition technology, many are looking to do so in the future. “This will happen one of two ways: Either they will receive legal guidance from the powers that be or it will happen once the technology becomes ubiquitous to the point where they can use it,” Parnofiello says. “Body cameras were viewed the same way a decade ago. But nowadays many patrol officers don’t want to start their shift without them.”

Addressing the concerns

The question then becomes: What is the reasonable expectation of privacy in public places? And what level of accuracy is acceptable for facial recognition technology?

After Axon announced its decision to ban face recognition capabilities, Matt Cagle, technology and civil liberties attorney at the ACLU of Northern California, commented: “Body cameras should be for police accountability, not surveillance of communities. One of the nation’s largest suppliers of police body cameras is now sounding the alarm and making the threat of surveillance technology impossible to ignore. The California legislature, and legislatures throughout the country, should heed this warning and act to keep police body cameras from being deployed against communities. The same goes for companies, like Microsoft and Amazon, who also have an independent obligation to act, as Axon did today. Face surveillance technology is ripe for discrimination and abuse, and fundamentally incompatible with body cameras, regardless of accuracy.”

A Georgetown Law study also sounded an alarm when it found that half of American adults are already in a law enforcement face recognition network, a finding Parnofiello agrees with. “The challenge for citizens is that you can essentially be identified anywhere, anytime, by anyone. The fear is that there is no guidance. There are very few laws [pertaining to face recognition], and essentially that is the problem,” he says.

Accuracy concerns also persist. Controversy grew after a Massachusetts Institute of Technology study found that commercial face analysis systems made far more errors on the faces of women and people with darker skin than on the faces of lighter-skinned men. Other studies revealed similar issues. A 2018 ACLU study found Amazon Rekognition falsely matched 28 members of Congress to mugshot photos, and noted that the false matches disproportionately involved people of color.

Barry Friedman, director of the Policing Project at New York University School of Law and member of Axon’s ethics board, warned in a statement of the “very real concerns about the accuracy of face recognition, and biases” and noted that “until we mitigate those challenges, we cannot risk incorporating face recognition into policing.”

Where we go from here

As the debate over the use of facial recognition rages on, the question becomes: Where do we go from here?

Parnofiello suggests answers are coming as the FBI’s Criminal Justice Information Services (CJIS) Division examines police use of facial recognition and facial identity. CJIS, he notes, considers facial identity protected information and wants it handled the way law enforcement already treats other personally identifiable information, such as fingerprints and criminal records. Agencies must comply with the CJIS Security Policy in their mobile communications and data use to receive approval to access federal databases.

Kimberly J. Del Greco, deputy assistant director of the CJIS Division, explained this concept further in testimony before Congress. She said, “Facial recognition is a tool that, if used properly, can greatly enhance law enforcement capabilities and protect public safety, but if used carelessly and improperly, may negatively impact privacy and civil liberties. The FBI is committed to the protection of privacy and civil liberties when it develops new law enforcement technologies. Therefore, when the FBI developed the use of facial recognition technologies, it also pioneered a set of best practices, so that effective deployment of these technologies to promote public safety can take place without interfering with our fundamental values.”

Del Greco said the FBI adheres to this standard by:

- Strictly governing the circumstances in which facial recognition technology may be used, including what probe images can be used.

- Using facial recognition technology for law enforcement purposes with human review and additional investigation. A positive match with facial recognition does not lead to an arrest. It produces a possible lead that requires investigative follow up to corroborate the lead before any action is taken.

- Having trained examiners at the FBI examine and evaluate every face query to ensure the results are consistent with FBI standards.

- Regularly testing, evaluating and improving facial recognition capabilities. The FBI has also partnered with the National Institute of Standards and Technology (NIST) to ensure algorithm performance is evaluated.

In other words, federal guidance already exists for the biometric, but those banning facial recognition technology want more limits and greater accuracy. FBI standards can help police departments as they set up policies governing face recognition, but only if the technology is allowed in the first place.

Ciabarra expresses his concerns over the bans: “When it comes to people’s safety, you have to be able to keep watch. Why would you take away a technology that keeps people from getting hurt? Why would you prevent police from using technology that helps them do their jobs? Face detection helps stop and solve crimes, and it can save people’s lives!”

The conversation stirred up by the bans, however, isn’t closing the door on the technology. Rather, Parnofiello believes it is opening the door to a better future for face recognition. No one is questioning its benefits for law enforcement, but he says standards, policy and the law must keep pace with the technology.

In a New York Times article about San Francisco’s recent ban on face recognition technology, Joel Engardio, vice president of Stop Crime SF, agreed, arguing that the city should not permanently rule out the biometric’s use. “Instead of an outright ban, why not a moratorium?” he asked. “Let’s keep the door open for when the technology improves. I’m not a fan of banning things when eventually they could be helpful.”

Ronnie Wendt is a freelance writer based in Waukesha, Wis. She has written about law enforcement and security since 1995.

About the Author

Ronnie Wendt

Owner/Writer, In Good Company Communications